Twelve Labs, a startup co-founded by Jae Lee, develops AI models that aim to interpret video content as effectively as text, opening up new applications across industries. The company is backed by major tech players including Nvidia, Samsung, and Intel. By letting users search videos for specific moments, summarize clips, or ask detailed questions about the footage, Twelve Labs aims to address the growing need for efficient video analysis. Its focus on customization and ethical considerations sets it apart in the competitive AI landscape.

Pioneering Video Search and Analysis Solutions

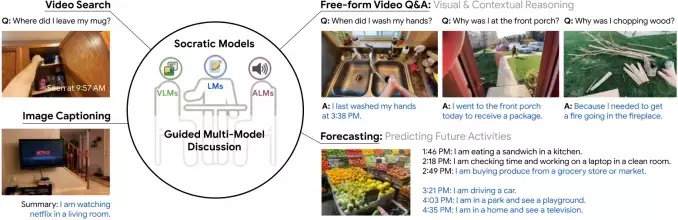

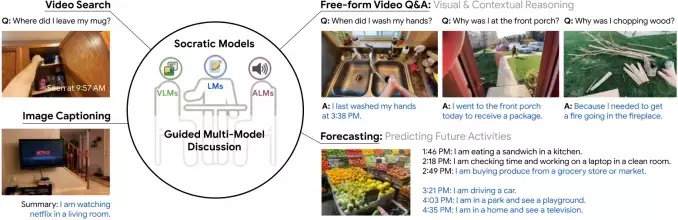

Twelve Labs has developed technology that changes how we interact with video content. Traditional keyword search matches only against titles, tags, and descriptions, leaving the content of the video itself unexplored. This limitation becomes particularly problematic as video becomes the fastest-growing and most data-intensive medium. Recognizing this gap, Jae Lee and his team created models that map text to what’s happening inside videos, including actions, objects, and background sounds. This approach lets users pinpoint specific moments or angles within vast video archives without manual tagging.
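
One common way to map text to moments inside a video is to place text queries and video segments in a shared vector space and rank segments by similarity. The sketch below illustrates that general idea only; it is not Twelve Labs' implementation. The segment index, the three-dimensional vectors, and the `find_moments` helper are all hypothetical, and a real system would get its vectors from a trained model rather than hand-written numbers.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical index: (start_sec, end_sec, embedding) per video segment.
# The vectors are made up for illustration.
SEGMENTS = [
    (0, 10, [0.9, 0.1, 0.0]),
    (10, 20, [0.1, 0.9, 0.1]),
    (20, 30, [0.0, 0.2, 0.9]),
]

def find_moments(query_vec, segments, top_k=1):
    """Return the time ranges of the top_k segments most similar to the query."""
    ranked = sorted(segments, key=lambda s: cosine(query_vec, s[2]), reverse=True)
    return [(start, end) for start, end, _ in ranked[:top_k]]

# Assumed to be the embedding of a text query; also made up.
query = [0.05, 0.95, 0.05]
best = find_moments(query, SEGMENTS)
print(best)
```

Because each segment carries its own timestamps, the result is a time range inside the video rather than a whole file, which is what makes moment-level search possible without manual tags.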

The company's models can search through footage, summarize clips, and answer complex queries like "When did the person in the red shirt enter the restaurant?" These capabilities support applications ranging from ad insertion and content moderation to generating highlight reels. Unlike general-purpose multimodal models from companies like Google and OpenAI, Twelve Labs focuses exclusively on video, so its products are optimized for this medium. The company trains its models on a mix of public domain and licensed data and does not use customer data, to maintain privacy and ethical standards. Bias remains a challenge; to address it, Twelve Labs conducts rigorous testing and plans to release meaningful benchmarks.

Expanding Product Portfolio and Strategic Partnerships

Building on its core competency in video analysis, Twelve Labs is expanding into areas like "any-to-any" search and multimodal embeddings. One of its models, Marengo, can search across images and audio in addition to video, using reference recordings or clips to guide searches. In addition, the Embed API creates mathematical representations (embeddings) of videos, text, images, and audio files, which makes it useful for anomaly detection and other applications. These advancements have attracted clients in the enterprise, media, and entertainment sectors, as well as strategic partners Databricks and Snowflake, which are integrating Twelve Labs' tools into their offerings.
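
To make the anomaly detection use case concrete, here is a minimal sketch of one simple approach: flag any clip whose embedding lies far from the average of all clips. It does not call the Embed API; the clip names, the two-dimensional vectors, the `find_anomalies` helper, and the threshold are all assumptions chosen for illustration.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def find_anomalies(embeddings, threshold):
    """Return names of items whose embedding is farther than threshold from the centroid."""
    center = centroid(list(embeddings.values()))
    return sorted(
        name for name, vec in embeddings.items()
        if distance(vec, center) > threshold
    )

# Hypothetical clip embeddings; real ones would have many more dimensions.
CLIPS = {
    "clip_a": [1.0, 0.0],
    "clip_b": [0.9, 0.1],
    "clip_c": [1.0, 0.1],
    "clip_d": [0.0, 1.0],  # deliberately unlike the others
}

anomalies = find_anomalies(CLIPS, threshold=0.5)
print(anomalies)
```

The centroid here includes the outlier itself, which pulls the average toward it. That is acceptable for a sketch, but a more robust variant might use a median or a nearest-neighbor distance instead.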

Twelve Labs recently secured $30 million in new investment, bringing its total funding to $107.1 million. The capital will fund product development and hiring. With over 30,000 developers using its platform, Twelve Labs is well-positioned for growth. The addition of Yoon Kim as president and chief strategy officer signals an aggressive expansion plan targeting new verticals like automotive and security. The involvement of In-Q-Tel, the venture firm that invests on behalf of U.S. intelligence agencies, suggests potential applications in national security, an area where the company's stated commitment to responsible and impactful technology development will be tested.