OpenAI’s recently announced O3 model has sparked both excitement and skepticism within the tech community. This article examines test-time scaling, the technique behind O3’s results: what it is, how it could improve AI performance, and the costs and challenges it brings.

Revolutionizing AI Performance with Unprecedented Efficiency

The Dawn of a New Era in AI Scaling

The narrative surrounding AI development has long been dominated by scaling laws: the principle that training larger models on more data with more compute yields better performance. Recently, however, this approach has shown diminishing returns. Enter test-time scaling, a newer technique that promises to reignite progress. OpenAI’s O3 model exemplifies this shift, posting large gains on benchmarks such as ARC-AGI and a challenging math test.

O3’s success on these tests has not gone unnoticed. Noam Brown, co-creator of OpenAI’s O-series models, highlighted how much progress was made in the three months since the launch of O1. Jack Clark of Anthropic echoed this sentiment, predicting faster AI progress in 2025 than in 2024. While enthusiasm is palpable, caution remains essential until more comprehensive testing is conducted.

The Mechanics Behind O3’s Breakthrough

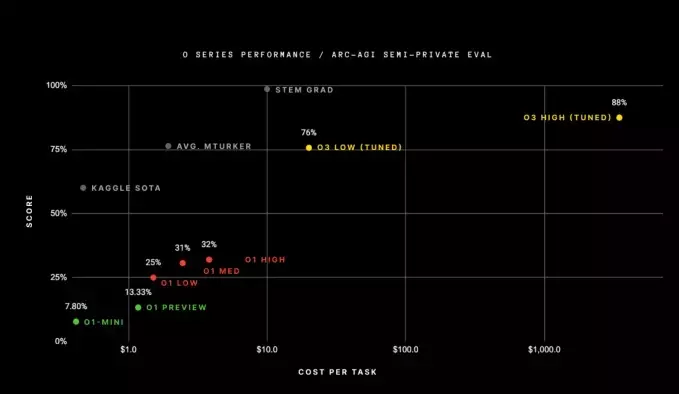

At the heart of O3’s impressive performance lies test-time scaling: spending more compute during the inference phase, when the model is generating an answer. In practice this can mean using more chips, using more powerful chips, or letting the model run longer before it responds. Although OpenAI has not disclosed the exact mechanisms, the results speak volumes. O3 scored an unprecedented 88% on the ARC-AGI benchmark, far surpassing earlier models such as O1, which scored only 32%.

François Chollet, creator of the ARC-AGI benchmark, acknowledged O3’s ability to adapt to unfamiliar tasks, approaching human-level performance in this domain. Yet he also pointed out the substantial cost: over $10,000 for each task. This raises concerns about practicality and affordability. Despite these costs, O3 represents a significant leap forward and indicates the potential of test-time scaling to enhance AI capabilities.

Economic Implications and Practical Applications
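
Because test-time scaling buys accuracy with inference compute, the cost per task rises with the amount of work done per answer. The sketch below illustrates that trade-off under loud assumptions: it uses simple majority voting over repeated samples (often called self-consistency), which is one publicly known way to spend more compute at inference and is not O3’s undisclosed method. The names `sample_answer`, `majority_vote`, `accuracy`, and `cost_per_task`, the 40% per-sample accuracy, and the dollar cost per sample are all hypothetical and chosen only for illustration.

```python
import random
from collections import Counter

CORRECT = "A"                  # the right answer to a toy multiple-choice task
WRONG = ["B", "C", "D", "E"]   # plausible wrong answers


def sample_answer(rng: random.Random, p_correct: float) -> str:
    """Hypothetical stand-in for one model call: right with probability p_correct."""
    if rng.random() < p_correct:
        return CORRECT
    return rng.choice(WRONG)


def majority_vote(rng: random.Random, n_samples: int, p_correct: float) -> str:
    """Spend more inference compute: draw n_samples answers, keep the most common."""
    votes = Counter(sample_answer(rng, p_correct) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, p_correct: float = 0.4,
             trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated tasks answered correctly with n_samples per task."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples, p_correct) == CORRECT
               for _ in range(trials))
    return hits / trials


def cost_per_task(n_samples: int, cost_per_sample: float) -> float:
    """In this toy model, cost grows linearly with the number of samples."""
    return n_samples * cost_per_sample


if __name__ == "__main__":
    for n in (1, 5, 25):
        # 0.50 is an assumed, purely illustrative dollar cost per sample.
        print(n, accuracy(n), cost_per_task(n, 0.50))
```

In this toy setting, accuracy climbs as more samples are drawn per task while the bill grows in direct proportion to the sample count, which is the same tension the pricing discussion in this section turns on.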

The high compute requirements of O3 pose a critical question: is this model economically viable? For everyday applications, the answer leans toward no; answering simple queries would be prohibitively expensive. Instead, O3 may find its niche in high-stakes scenarios where precision and depth are paramount, such as strategic planning or complex problem-solving in finance and academia.

Ethan Mollick, a Wharton professor, noted that institutions with deep pockets could afford O3 for specialized use cases. Even at current prices, reliable performance could justify the investment for critical decision-making. OpenAI has already introduced a $200 tier for a high-compute version of O1 and has reportedly considered subscription plans costing up to $2,000. These prices reflect the substantial resources required to operate advanced AI models like O3.

Challenges and Future Prospects

Despite O3’s achievements, challenges persist. Notably, large language models still hallucinate, producing false or misleading information. Test-time scaling has not fully resolved this issue, so AI outputs still call for caution. Moreover, O3’s steep costs raise questions about scalability and broader applicability.

Looking ahead, innovations in AI inference chips could unlock further gains in test-time scaling. Startups like Groq and Cerebras are building chips aimed at making inference more efficient and cost-effective. As these technologies evolve, they may pave the way for more accessible and powerful AI systems.

In conclusion, while O3 marks a pivotal moment in AI development, it also highlights the need for balanced progress. The future of AI hinges on addressing economic feasibility and technical limitations so that advancements benefit a wider range of users and industries.