The world of computing is witnessing a seismic shift as Nvidia, under the leadership of CEO Jensen Huang, claims its AI chips are advancing at an unprecedented rate, far outpacing the historical benchmarks set by Moore’s Law. This bold assertion comes at a critical juncture when many are questioning the sustainability of AI progress. Huang’s vision for the future of AI hinges on three key scaling laws that promise to revolutionize how we think about computational power and cost efficiency.

Transforming the Landscape of AI with Breakthrough Performance

The Evolution of Computing Power

For decades, Moore’s Law has been the guiding principle behind the exponential growth in computing capabilities. Formulated by Intel co-founder Gordon Moore in 1965, it predicted that the number of transistors on a chip would double every year, a rate Moore revised in 1975 to roughly every two years, with performance rising in step. This prediction held for several decades, driving rapid advances in technology and drastically reducing costs. In recent years, however, the pace has slowed, raising concerns about the future of computing.

Nvidia is challenging this narrative. According to CEO Jensen Huang, the company’s latest data center superchip is more than 30 times faster at running AI inference workloads than its previous generation. This leap in performance is not just a testament to Nvidia’s engineering prowess but also a sign that the company is pushing the boundaries of what’s possible in AI hardware development.

The ability to innovate across the entire stack, from architecture and chip design to system integration and algorithms, has allowed Nvidia to move at a pace that Huang says surpasses Moore’s Law. Huang believes that by optimizing every aspect of the AI pipeline, Nvidia can continue to deliver performance improvements that will shape the future of AI.

A New Era of AI Scaling Laws

Huang’s vision for the future of AI goes beyond the limitations of Moore’s Law. He describes three active AI scaling laws that are redefining how AI is developed:

First, pre-training is the initial phase in which AI models learn patterns from vast datasets. This foundational step is crucial for building robust and versatile AI systems. Second, post-training fine-tunes these models using techniques like human feedback, so that they produce more accurate and contextually relevant outputs. Finally, test-time compute allows AI models to “think” longer during the inference phase, providing more thoughtful and nuanced responses.

These scaling laws are not just theoretical concepts; they have practical implications for the development of AI reasoning models. For instance, OpenAI’s o3 model, which relies heavily on test-time compute, demonstrates the potential for AI to achieve human-level performance on complex tasks. However, the high computational costs associated with such models have raised concerns about their feasibility for widespread use.

Despite these challenges, Huang remains optimistic. He argues that increased computing capability will drive down costs over time, making advanced AI models more accessible. The direct and immediate solution, according to Huang, is more computational power, which will ultimately lead to lower prices and greater affordability.

Nvidia’s Dominance in the AI Chip Market

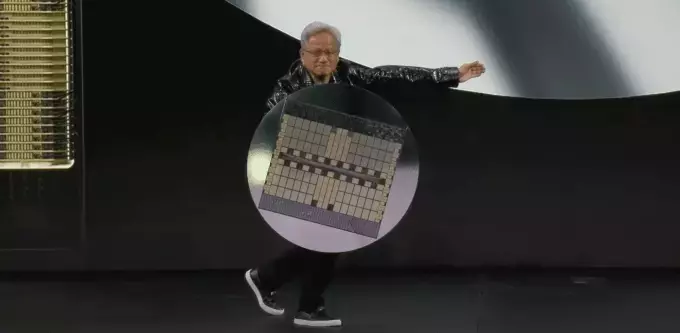

Nvidia’s H100s have long been the go-to choice for tech companies looking to train AI models. However, as the focus shifts towards inference, there are questions about whether Nvidia’s expensive chips will maintain their dominance. During his keynote at CES, Huang unveiled the GB200 NVL72, a data center superchip that he says is 30 to 40 times faster at running AI inference workloads than the H100. This performance boost means that AI reasoning models, which require substantial computational resources during the inference phase, should become more cost-effective over time.

The GB200 NVL72 represents a major milestone in Nvidia’s effort to build more performant chips. Huang emphasizes that higher performance leads to lower costs in the long run, a trend already visible in the rapidly declining prices of AI models. As Nvidia continues to push the boundaries of AI hardware, it is poised to play a pivotal role in shaping the future of AI.

The Road Ahead: A Thousandfold Improvement in a Decade

Looking back, Huang points out that Nvidia’s AI chips today are 1,000 times better than they were a decade ago. This exponential improvement far exceeds the pace set by Moore’s Law, and Huang sees no signs of slowing down. The company’s relentless pursuit of innovation is driven by a commitment to delivering superior performance and cost efficiency.

As AI continues to evolve, Nvidia’s advancements in chip technology will be instrumental in unlocking new possibilities. From healthcare to autonomous vehicles, the applications of AI are vast and varied. With each breakthrough, Nvidia is not only transforming the landscape of AI but also paving the way for a future where intelligent machines become an integral part of our daily lives.

In conclusion, Jensen Huang’s vision for the future of AI is one of continuous innovation and unparalleled performance. By surpassing the limitations of Moore’s Law, Nvidia is setting new standards in the world of computing, ensuring that the next chapter in AI’s evolution is both exciting and transformative.
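
As a rough sanity check on the thousandfold-in-a-decade comparison above, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names `growth_over` and `implied_doubling_period` are hypothetical, and the two-year doubling period is an assumption based on the commonly cited form of Moore’s Law (Moore’s 1975 revision), not a figure taken from Huang’s remarks.

```python
import math


def growth_over(years: float, doubling_period_years: float) -> float:
    """Total multiplicative gain after `years` if capability doubles
    once every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


def implied_doubling_period(total_gain: float, years: float) -> float:
    """Doubling period (in years) that would produce `total_gain` over `years`."""
    return years / math.log2(total_gain)


DECADE = 10.0

# Assumption: the commonly cited two-year doubling period for Moore's Law.
moore_gain = growth_over(DECADE, doubling_period_years=2.0)

# Huang's stated figure: chips 1,000 times better than a decade ago.
claimed_gain = 1000.0
claimed_period = implied_doubling_period(claimed_gain, DECADE)

print(f"Two-year doubling over a decade: {moore_gain:.0f}x")
print(f"Claimed gain over a decade:      {claimed_gain:.0f}x")
print(f"Doubling period implied by the claim: {claimed_period:.2f} years")
```

Under the two-year assumption, a decade of Moore’s Law gives a 32-fold gain, so a 1,000-fold gain implies a doubling period of about one year. The comparison is sensitive to the assumed period: with the original one-year doubling from 1965, a decade would yield 1,024-fold, roughly matching the claimed figure.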