Artificial intelligence (AI) is reshaping industries and pushing the boundaries of innovation. Amid this shift, a critical question emerges: can synthetic data replace human-generated data for training AI models? This article examines the potential, challenges, and implications of using machine-generated data in AI development.

Unleashing the Power of Synthetic Data for Next-Generation AI Models

Understanding the Essence of AI Development

Artificial intelligence depends on vast datasets to learn patterns and make accurate predictions. These systems are essentially statistical engines that need many examples to grasp the nuances within data. For instance, a model trained on emails learns that "to whom" often precedes "it may concern." Annotations, the labels or tags applied to data, guide models in distinguishing between the things they see. Bad labels have direct consequences: a model trained on kitchen photos labeled "cow" would learn to identify kitchens as cows, which is why precise labeling matters.

The demand for labeled data has fueled a growing market for annotation services. According to Dimension Market Research, this market is projected to grow from $838.2 million today to $10.34 billion over the next decade. Annotators, numbering in the millions, range from specialized experts earning decent wages to workers in developing countries who receive minimal pay and no benefits.

The Challenges of Human-Generated Data

Human limitations pose significant hurdles to robust AI training. Annotators can only work so fast, and they bring biases and errors to their work. Human labor is also costly, and acquiring large datasets is becoming increasingly expensive. Companies like Shutterstock charge millions for access to their archives, while Reddit has generated substantial revenue from licensing data to tech giants such as Google and OpenAI.

Data is also becoming harder to access. Over 35% of top websites now block web scrapers, and around 25% of high-quality data sources have been restricted. If current trends persist, developers could run short of training data sometime between 2026 and 2032, according to Epoch AI projections. This scarcity, coupled with copyright concerns and ethical considerations, has prompted a reevaluation of data sourcing strategies.

Synthetic Data: A Promising Alternative

Synthetic data offers a compelling answer to these challenges. By generating data algorithmically, companies can bypass many of the limitations of human-generated data. For example, Writer’s Palmyra X 004 model was trained almost entirely on synthetic data at a cost of just $700,000, compared with an estimated $4.6 million for a comparable OpenAI model. Microsoft’s Phi, Google’s Gemma, and Nvidia’s synthetic data generation tools show how widely the industry has embraced this approach.

Gartner predicts that 60% of AI and analytics projects will utilize synthetic data by the end of this year. Meta’s Movie Gen and Amazon’s Alexa speech recognition models incorporate synthetic data to enhance performance. Luca Soldaini, a senior research scientist at the Allen Institute for AI, notes that synthetic data can swiftly expand upon human intuition, creating tailored datasets for specific applications.

The Risks and Limitations of Synthetic Data

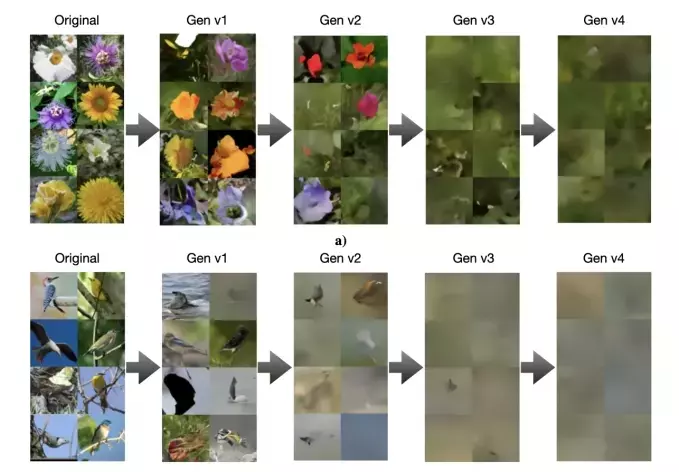

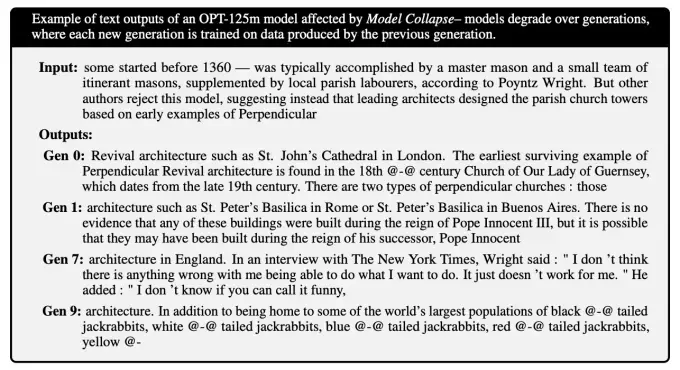

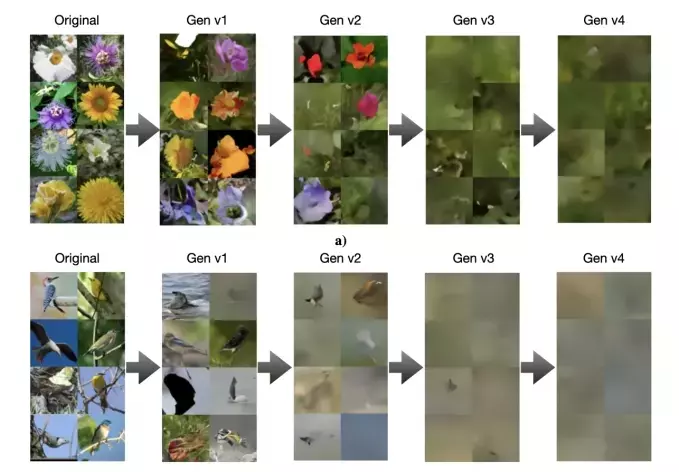

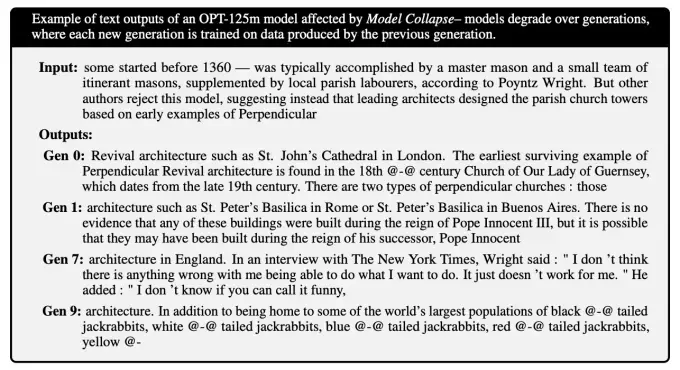

Despite its advantages, synthetic data is not without risks. The "garbage in, garbage out" principle applies here as well: biases and limitations in the base data propagate into the synthetic outputs. For instance, a base dataset that underrepresents certain groups will yield synthetic data, and ultimately models, with the same skew. Researchers at Rice University and Stanford found that over-reliance on synthetic data can degrade model quality and diversity after several generations of training.

Os Keyes, a PhD candidate at the University of Washington, cautions against the hallucinations produced by complex models like OpenAI’s o1. These inaccuracies can compromise the reliability of models trained on synthetic data. Studies published in Nature show how error-ridden data leads models to produce irrelevant or generic responses, eventually resulting in model collapse.

To mitigate these risks, thorough review and curation of synthetic data are essential. Pairing it with real-world data can improve accuracy and prevent homogenization. As Soldaini emphasizes, synthetic data pipelines require careful inspection and improvement before deployment. The vision of fully self-training AI remains elusive, and for now human oversight is crucial to ensure model integrity and functionality.
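The loss of diversity across generations, and the benefit of mixing in real data, can be illustrated with a deliberately simple toy. The sketch below is not the method used in the Rice, Stanford, or Nature studies. It assumes the "model" is just a Gaussian fitted to its training data, and the function name `simulate` and its parameters are hypothetical choices made for this illustration. Each generation is trained on samples from the previous generation's fit, optionally blended with fresh "real" samples.

```python
import random
import statistics


def simulate(generations, n, real_fraction, seed=0):
    """Repeatedly refit a Gaussian to data sampled partly from the previous fit.

    Returns the fitted standard deviation recorded at each generation.
    """
    rng = random.Random(seed)
    # Generation 0 trains on purely "real" data, assumed here to be N(0, 1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    n_real = round(n * real_fraction)
    stds = []
    for _ in range(generations):
        # "Train" the toy model: estimate mean and spread from the current data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        stds.append(sigma)
        # Build the next training set: fresh real samples plus model output.
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_real)]
        data = real + synthetic
    return stds


synthetic_only = simulate(generations=200, n=20, real_fraction=0.0)
mixed = simulate(generations=200, n=20, real_fraction=0.5)
print(f"synthetic only, final spread: {synthetic_only[-1]:.6f}")
print(f"half real data, final spread: {mixed[-1]:.6f}")
```

In this toy setting, the synthetic-only run tends to see its spread shrink toward zero, because each refit on a small sample slightly underestimates the variance and the errors compound. The run that keeps half of each training set real stays anchored near the original spread. Real models are far more complex, so this should be read only as intuition for why blending in real-world data helps.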