In a recent incident, the CEO of Triplegangers, Oleksandr Tomchuk, discovered that his company's e-commerce platform was experiencing a severe disruption. The culprit turned out to be an aggressive bot from OpenAI attempting to scrape the site's extensive database. This event has sparked discussions about data protection and the responsibilities of AI companies when interacting with online businesses.

Aggressive Scraping Leads to Website Crash and Financial Losses

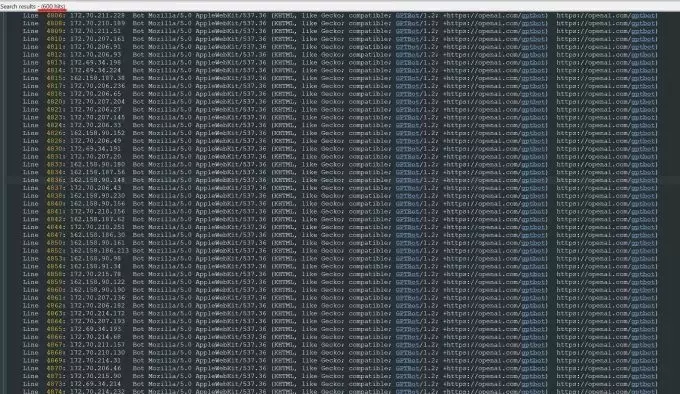

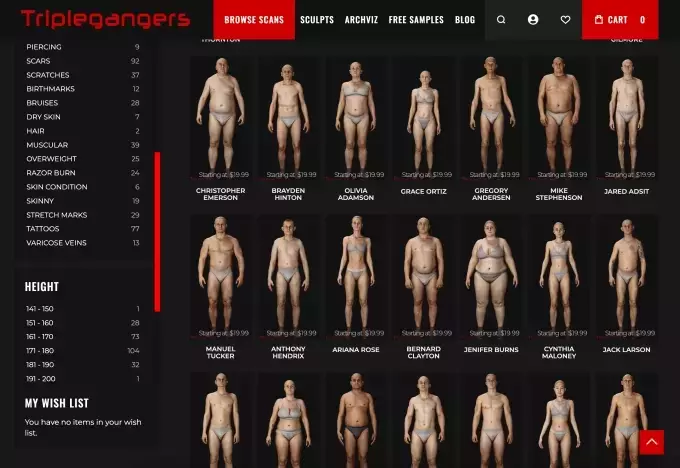

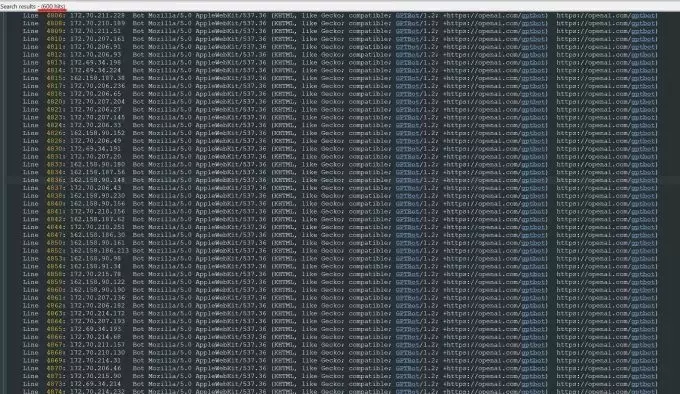

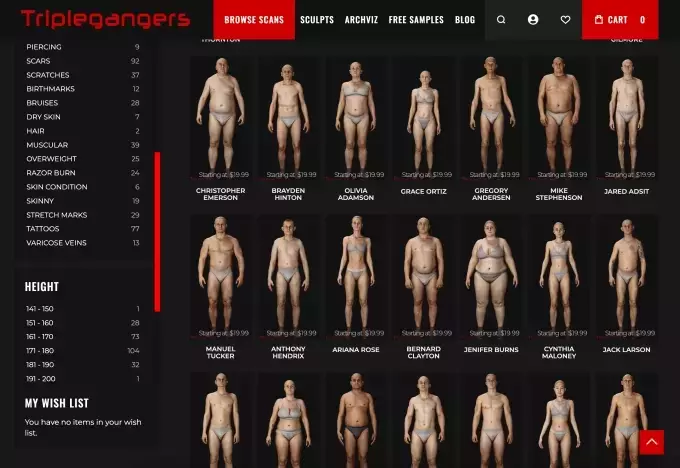

On a busy Saturday, Triplegangers' website went down unexpectedly. The company, known for its vast collection of 3D human digital doubles, found itself under siege by a relentless bot from OpenAI. Using hundreds of IP addresses, the bot bombarded the server with tens of thousands of requests in an attempt to download hundreds of thousands of images and their detailed descriptions. The aggressive scraping not only took the site offline but also caused substantial financial losses through increased AWS usage costs.

Triplegangers, a small team based in Ukraine with operations licensed in Tampa, Florida, had spent over a decade building what they claim is the largest database of 3D human models on the web. Their terms of service explicitly forbid unauthorized bots from accessing their content, but this alone proved insufficient. To prevent such incidents, a website must configure a robots.txt file that specifically instructs OpenAI's bots not to crawl it. Even then, an updated file can take up to 24 hours to be recognized, leaving the site vulnerable during that period.
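As a rough illustration of the robots.txt rule described above, the sketch below defines a file that disallows the `GPTBot` user agent (the crawler name mentioned in this article) and checks it with Python's standard `urllib.robotparser`. The `example.com` URLs and the helper name `is_allowed` are assumptions made for illustration, and other crawlers would need their own entries.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block GPTBot everywhere, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # example.com is a placeholder domain, not the real site.
    print(is_allowed(ROBOTS_TXT, "GPTBot", "https://example.com/models/1"))
    print(is_allowed(ROBOTS_TXT, "Mozilla/5.0", "https://example.com/models/1"))
```

A check like this only confirms that the file says what the owner intends. It does not force a crawler to comply, which is why the article also mentions blocking at the network level.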

By Wednesday, after days of persistent attacks, Triplegangers had put a properly configured robots.txt file in place and set up Cloudflare to block several bots, including GPTBot. Despite these efforts, the company still does not know how much data OpenAI took and has no direct way to request its removal. OpenAI's failure to provide an opt-out tool exacerbates the situation, leaving businesses like Triplegangers feeling exposed.

A Call for Awareness and Action

This incident underscores the critical need for small online businesses to actively monitor their server logs and understand how to protect their data from unauthorized access. The aggressive behavior of AI bots, especially those from multibillion-dollar companies, highlights a significant loophole in current data protection practices. While AI companies claim to honor robots.txt files, the onus is unfairly placed on business owners to configure these protections correctly.
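One way to act on the log-monitoring advice is a small script that counts requests and distinct IP addresses per user agent. The sketch below assumes the server writes the common "combined" access log format. The sample lines, IP addresses, and user-agent strings are made up for illustration and are not taken from the incident.

```python
import re
from collections import Counter, defaultdict

# Assumed combined log format:
# IP ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize(lines):
    """Map each user agent to (request count, number of distinct IPs)."""
    requests = Counter()
    ips = defaultdict(set)
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        agent = match.group("agent")
        requests[agent] += 1
        ips[agent].add(match.group("ip"))
    return {agent: (count, len(ips[agent])) for agent, count in requests.most_common()}


# Made-up sample data using documentation-reserved IP ranges.
SAMPLE = [
    '192.0.2.1 - - [01/Jan/2000:10:00:00 +0000] "GET /models/1 HTTP/1.1" 200 512 "-" "GPTBot/1.0"',
    '192.0.2.2 - - [01/Jan/2000:10:00:01 +0000] "GET /models/2 HTTP/1.1" 200 512 "-" "GPTBot/1.0"',
    '198.51.100.7 - - [01/Jan/2000:10:00:02 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
]

if __name__ == "__main__":
    for agent, (count, distinct_ips) in summarize(SAMPLE).items():
        print(f"{agent}: {count} requests from {distinct_ips} IPs")
```

A single user agent generating many requests from many different IPs, as in the pattern described in this article, would stand out at the top of such a summary.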

Oleksandr Tomchuk's experience serves as a stark reminder that without proper safeguards, valuable intellectual property can be compromised. He urges other small business owners to remain vigilant and take proactive steps to secure their online assets. AI crawlers and scrapers reportedly caused an 86% increase in invalid traffic in 2024, which further underlines the urgency of the issue. Ultimately, the responsibility should lie with AI companies to seek permission rather than unilaterally scraping data.