Google is on a journey to bring augmented reality and multimodal AI capabilities to glasses, though the details of its plans remain somewhat hazy. The company has shown multiple demos of Project Astra, and it is now set to release prototype glasses for real-world testing. This marks an important step in the evolution of computing.

Unlock the Future with Google's AR and AI Glasses

Project Astra: The Foundation of Google's Glasses

DeepMind's Project Astra is at the heart of Google's vision for glasses. The project aims to build real-time, multimodal AI apps and agents, and multiple demos have shown its potential running on prototype glasses. These glasses combine AI and AR capabilities and could transform the way we interact with technology.

Google's decision to release these prototype glasses to a small group of selected users is a significant move. It shows the company's commitment to pushing the boundaries of vision-based computing. Google is also now allowing hardware makers and developers to build glasses, headsets, and experiences around Android XR, its new operating system.

The Coolness and Vaporware Aspect

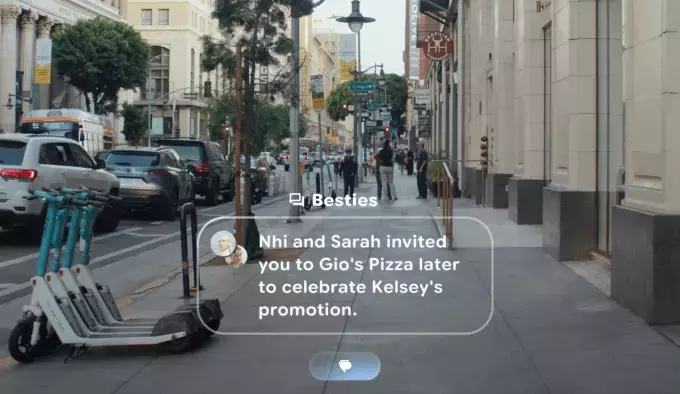

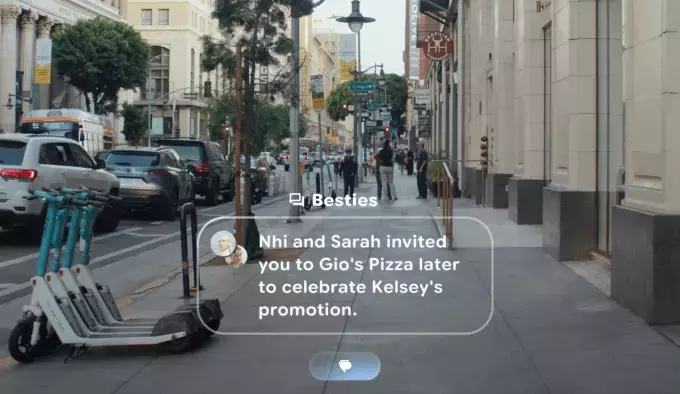

The prototype glasses seem incredibly cool, but it's important to note that they are essentially vaporware at this stage. Google has shared no concrete details about an actual product or its release date. Even so, the company clearly intends to launch these glasses at some point, calling them the "next generation of computing" in a press release.

For now, Google is focused on building out Project Astra and Android XR to make these glasses a reality. It has shared new demos showing how the prototype glasses can use AR to perform useful tasks such as translating posters, remembering things around the house, and reading texts without reaching for a phone.

Google's Vision for AR and AI Glasses

Google says Android XR will eventually support glasses that provide all-day help. The company envisions stylish, comfortable glasses that people will want to wear every day and that work seamlessly with other Android devices. These glasses would put the power of Gemini at users' fingertips, surfacing helpful information such as directions, translations, or message summaries right when it is needed, either in the wearer's line of sight or directly in their ear.

Many tech companies have shared similar visions for AR glasses, but Google seems to have an edge with Project Astra, which it is launching as an app to beta testers soon. I had the opportunity to try the multimodal AI agent as a phone app this week and was impressed by its capabilities.

Walking around a library on Google's campus, pointing a phone camera at objects and talking to the agent, I watched it process voice and video in real time. It could answer questions about what I was seeing and provide summaries of authors and books.

Project Astra works by streaming pictures of the user's surroundings while simultaneously processing their voice. Google DeepMind says no user data is used to train its models, but the AI remembers the surroundings and conversation for 10 minutes, allowing it to refer back to earlier information.

Team members also demonstrated how Astra can read phone screens, much as it understands what it sees through a camera. It can summarize Airbnb listings, show nearby destinations using Google Maps, and run Google Searches based on what is on the screen.

Using Project Astra on a phone is impressive, and it hints at the potential of AI apps. OpenAI has also demoed vision capabilities for GPT-4o that are similar to Project Astra's and are set to release soon. These apps could make AI assistants more useful by extending them beyond text chat.

The AI model seems ideally suited to a pair of glasses, and Google appears to share that vision. But it may take some time to make it a reality.