A significant milestone has been reached in the pursuit of artificial general intelligence (AGI): ARC-AGI, a well-regarded benchmark introduced by Francois Chollet in 2019, is now closer to being solved. However, Chollet and his collaborators say this development highlights flaws in the test's design rather than marking a genuine research breakthrough.

ARC-AGI: A Key Test for AGI Progress

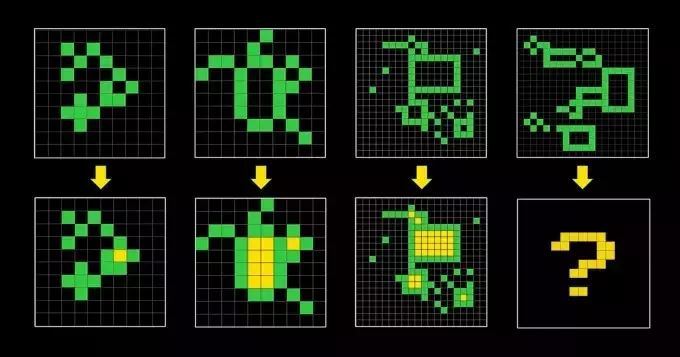

In 2019, Francois Chollet, a prominent figure in the AI world, introduced the ARC-AGI benchmark, short for “Abstraction and Reasoning Corpus for Artificial General Intelligence.” It was designed to assess whether an AI system can efficiently acquire new skills outside the data it was trained on. Chollet asserts that ARC-AGI remains the only AI test that measures progress toward general intelligence, although other tests have been proposed.

Until this year, the best-performing AI could solve only about a third of the tasks in ARC-AGI. Chollet attributed this to the industry's overemphasis on large language models (LLMs), which he believes lack true “reasoning” capabilities.

“As LLMs mainly rely on memorization, they struggle with generalization,” he stated in a series of posts on X in February. “They fail when faced with anything not in their training data.”

Indeed, LLMs are statistical machines. By training on a large number of examples, they learn patterns that let them make predictions, for instance that “to whom” in an email is typically followed by “it may concern.”

Chollet argues that although LLMs may be able to memorize “reasoning patterns,” it is unlikely that they can generate “new reasoning” in novel situations. “If you need to be trained on numerous examples of a pattern to learn a reusable representation, you are essentially memorizing,” he contended in another post.

To encourage research beyond LLMs, Chollet and Mike Knoop, the co-founder of Zapier, launched a $1 million competition in June to build open-source AI capable of beating ARC-AGI. Out of 17,789 submissions, the best-performing model scored 55.5%, roughly 20 percentage points higher than the 2023 top score but still short of the 85% “human-level” threshold required to win.

However, Knoop emphasizes that this does not mean we are roughly 20% closer to achieving AGI.

In an X post announcing the winners of the ARC Prize 2024, along with an extensive technical report on what they learned from the competition, the organizers noted that state-of-the-art performance on ARC-AGI had risen from 33% to 55.5%, the largest single-year improvement since 2020.

In a blog post, Knoop pointed out that many ARC-AGI submissions reached their scores through “brute force” rather than true intelligence. He suggested that a “large fraction” of ARC-AGI tasks “[do] not carry much useful signal towards general intelligence.”

ARC-AGI consists of puzzle-like problems in which an AI, given a grid of different-colored squares, must generate the correct “answer” grid. The problems are designed to force an AI to adapt to problems it has not encountered before, but it remains unclear whether they actually achieve this goal.

Tasks in the ARC-AGI benchmark. Models must solve ‘problems’ in the top row; the bottom row shows solutions. Image Credits: ARC-AGI

Knoop acknowledged that “[ARC-AGI] has been unchanged since 2019 and is not perfect.”

Chollet and Knoop have also faced criticism for overselling ARC-AGI as a benchmark for AGI at a time when the very definition of AGI is hotly debated. One OpenAI staff member recently claimed that if AGI is defined as AI “better than most humans at most tasks,” then AGI has “already” been achieved.

Chollet and Knoop plan to release a second-generation ARC-AGI benchmark and organize a 2025 competition to address these issues. “We will continue to guide the efforts of the research community towards what we consider the most important unsolved problems in AI and accelerate the path to AGI,” Chollet wrote in an X post.

Fixing the shortcomings of the first ARC-AGI test will not be easy. If the flaws of the initial test are any indication, defining intelligence for AI will prove as challenging and controversial as it has been for human beings.
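For readers curious about what an ARC-AGI puzzle looks like in practice, here is a minimal sketch. It assumes the layout of the public ARC dataset: a task holds a few “train” input/output demonstration pairs plus “test” inputs, and each grid is a 2D array of integers from 0 to 9, each standing for a color. The toy task, the four candidate transformations, and the `solve` and `score` helpers are all hypothetical illustrations, not any competitor's method or the official grader. The search loop only gestures at the kind of “brute force” Knoop describes: try transformations until one fits every demonstration, then apply it to the test input.

```python
from typing import Callable, Optional

# A grid is a 2D list of color indices (0-9), as in the public ARC dataset.
Grid = list[list[int]]
Transform = Callable[[Grid], Grid]

# Hypothetical toy task: two demonstration pairs and one test pair.
TOY_TASK = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
        {"input": [[2, 2], [0, 3]], "output": [[2, 2], [3, 0]]},
    ],
    "test": [
        {"input": [[5, 0], [6, 7]], "output": [[0, 5], [7, 6]]},
    ],
}


def identity(g: Grid) -> Grid:
    # Return an unchanged copy of the grid.
    return [row[:] for row in g]


def flip_horizontal(g: Grid) -> Grid:
    # Mirror each row left-to-right.
    return [row[::-1] for row in g]


def flip_vertical(g: Grid) -> Grid:
    # Reverse the order of rows (top-to-bottom mirror).
    return [row[:] for row in g[::-1]]


def transpose(g: Grid) -> Grid:
    # Swap rows and columns.
    return [list(col) for col in zip(*g)]


# Hypothetical, tiny search space; real brute-force entries search far larger ones.
CANDIDATES: dict[str, Transform] = {
    "identity": identity,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
    "flip_horizontal": flip_horizontal,
}


def solve(task: dict) -> tuple[Optional[str], list[Grid]]:
    """Find the first candidate consistent with every train pair and apply it to the test inputs."""
    for name, fn in CANDIDATES.items():
        if all(fn(pair["input"]) == pair["output"] for pair in task["train"]):
            return name, [fn(pair["input"]) for pair in task["test"]]
    return None, []


def score(predictions: list[Grid], task: dict) -> float:
    """Fraction of test outputs reproduced exactly; a near-miss counts as wrong."""
    tests = task["test"]
    correct = sum(pred == pair["output"] for pred, pair in zip(predictions, tests))
    return correct / len(tests)


if __name__ == "__main__":
    rule, preds = solve(TOY_TASK)
    print(rule, preds, score(preds, TOY_TASK))
```

Run as a script, this prints `flip_horizontal [[[0, 5], [7, 6]]] 1.0`. The all-or-nothing `score` assumes exact-match grading with a single attempt per test input; actual competition rules may differ. The sketch also hints at why Knoop is wary of brute force: with a large enough library of transformations, a search can land on the right grid without anything resembling general reasoning.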